Cloud computing in modern distributed systems | Mediterranean College

Abstract

A distributed system is a network whose components, located on different connected computers, communicate and coordinate their actions by passing messages to one another. Combined with databases, networking, and software, such systems provide flexible resources and economies of scale.

ALEX is an ambitious project that I designed and implemented to meet the academic requirements of Mediterranean College. It is a typical cloud-based file-sharing system that illustrates the separate components of a distributed system.

1. DISTRIBUTED SYSTEMS

Definition:

“A distributed system is a collection of autonomous computing elements that appears to its users as a single coherent system.”[1]

When talking about distributed systems and cloud computing, we immediately think of the delivery of large computing services such as social media, Microsoft 365, Owncloud and many more, which use servers to store data remotely over the Internet and so offer faster communication and, in turn, innovation. Typically, a business uses only one cloud service or internal network, which helps it lower its operating costs.

The spread of these services has directly driven the effort to improve distributed systems and has increased the focus on interconnected systems in general.

The components of a distributed system are located on different networked computers, often in different geographical locations, but they communicate with one another by sending packets of information, commonly over protocols such as FTP and HTTP. The main reason behind their increasing acceptance is probably that they allow horizontal scaling. For instance, a traditional database that runs on a single computer requires its users to upgrade the hardware to handle increasing traffic (vertical scaling). The biggest issue with vertical scaling is that any hardware eventually proves insufficient and obsolete.

Horizontal scaling makes it possible to meet increasing performance demands by adding more computers instead of constantly upgrading a single system, which is usually more expensive. Distributed systems are inherently scalable because they work across different machines, so it is simpler to add another machine to handle the increasing workload than to upgrade a single system over and over again. There is virtually no limit on how far a user can scale. A big advantage is that under high demand we can run every machine at full capacity, and take machines offline when the workload is low.
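The scaling argument above can be made concrete with a small calculation. The following is a minimal sketch in Python, used here only for illustration; the function name `machines_needed` and the figures (500 requests per second per machine) are hypothetical and do not come from the ALEX project.

```python
import math

def machines_needed(requests_per_second: float, capacity_per_machine: float) -> int:
    """Return how many identical machines are needed to serve the given load."""
    if capacity_per_machine <= 0:
        raise ValueError("capacity_per_machine must be positive")
    # Always keep at least one machine online, even when the load is zero.
    return max(1, math.ceil(requests_per_second / capacity_per_machine))

# Hypothetical figures: each machine can serve 500 requests per second.
peak = machines_needed(4200, 500)   # machines to run under high demand
quiet = machines_needed(300, 500)   # machines to keep online when demand is low
print(peak, quiet)
```

Under these assumptions the difference between `peak` and `quiet` is the number of machines that can be taken offline when the workload drops, which is the flexibility that vertical scaling does not offer.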

Because the components of a distributed system can be numerous and act autonomously, such systems offer significant benefits, such as a better price-to-performance ratio, geographical independence and, last but not least, fault tolerance. Some of the advantages of a distributed system are:

1. Reliability: A distributed system should be consistent, secure, and capable of masking possible errors.

2. Performance: Distributed models can be more efficient and more stable than other systems. They are more efficient because they allow complex problems or data to be broken into smaller pieces that multiple computers work on in parallel, which can cut down the time needed to solve those problems.

3. Low Latency: A system can have nodes in multiple geographical locations, and traffic can be routed to the node closest to the user, resulting in low latency and better performance.

4. Scalability: Distributed systems can scale with respect to size, geography (for example across time zones) and administration, which gives them flexibility.

5. Redundancy and Fault Tolerance: Distributed systems and clouds are also inherently more fault-tolerant than single machines. With a backup server or data center, the system keeps working even if one data center shuts down or goes offline. This makes them more reliable than a single machine, where a failure takes everything down with it.
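The fault-tolerance point in the list above can be sketched as a simple failover loop. This is a minimal Python illustration under stated assumptions: the replicas are modelled as plain functions, and the names `call_with_failover`, `primary` and `backup` are hypothetical.

```python
from typing import Callable, Sequence

def call_with_failover(replicas: Sequence[Callable[[], str]]) -> str:
    """Try each replica in order and return the first successful answer."""
    last_error = None
    for replica in replicas:
        try:
            return replica()
        except ConnectionError as error:
            last_error = error  # this replica is down, try the next one
    raise RuntimeError("all replicas are unavailable") from last_error

def primary() -> str:
    # Stands in for a data center that has gone offline.
    raise ConnectionError("primary data center is offline")

def backup() -> str:
    # Stands in for a healthy backup server.
    return "served by backup"

print(call_with_failover([primary, backup]))
```

With a single machine there is only one entry in the list, so one failure makes the whole call fail; adding a second replica is what lets the request succeed.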

There are, though, a few disadvantages that one can observe in a distributed system:

1. Security: Distributed systems are more vulnerable than centralized systems. Not only does the network itself have to be secured, but users, depending on their role (administrator, editor, and so on), also need to be careful, for instance with their use of passwords, and replicated data has to be controlled across multiple, less secure locations.

2. Complexity: Distributed systems are more complex than other types of systems. For instance, the software has to be designed to run on multiple nodes at the same time, which results in higher cost and more complexity.

3. Administration: The administration of a distributed system is a hard task. Databases can be controlled fairly easily in a centralized computing system, but in a distributed system it is not easy to manage security, as we saw above.

Two of the most common architectures used in the implementation of distributed systems are the client-server and peer-to-peer architectures.

Client-Server Architecture: This type of architecture closely resembles the producer-consumer concept.

Such an application is implemented on a distributed network that connects the client to the server. The server provides the central functionality: any number of clients can connect to the server and request that it perform a task. The server accepts these requests, performs the required task, and returns any results to the client, as appropriate. These services can be file sharing, application access, storage, and so on. The communication starts when the client sends a request to the server over the network. The server then responds to the client, providing the requested data. The major drawback of such a system is that the server is potentially a single point of failure: if it fails, the client cannot obtain the service. For this reason, large companies invest in backup servers or clouds for redundancy. Another noticeable disadvantage of the client-server model is that resources may become scarce if there are too many clients or not enough bandwidth. Clients generally increase the demand on a system while contributing nothing to it.

The solution would be to upgrade the hardware and software, as noted earlier. But when demand drops, the extra resources remain unused. This is the problem of provisioning for peak load.

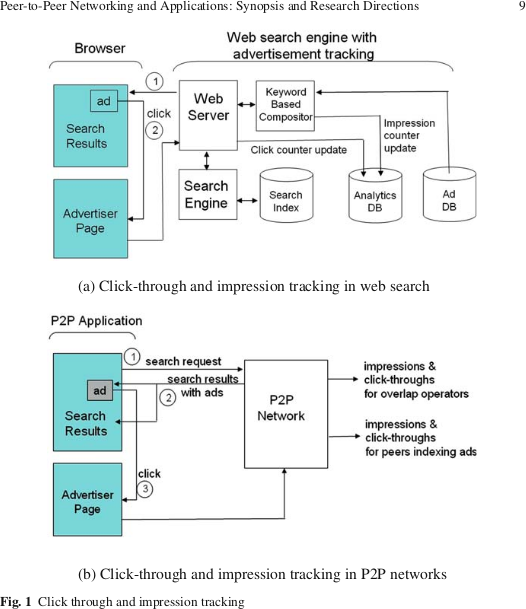

fig [e]

Peer to peer architecture:

Compared to a client-server system, which is more appropriate for service-oriented procedures, the peer-to-peer architecture (abbreviated P2P) is more suitable for other kinds of tasks. The term peer-to-peer refers to distributed systems in which the workload is divided equally among all the components, as opposed to the client-server model. All the computers in a peer-to-peer distributed system contribute the same processing power and memory. Thus, as the size of such a system increases, the capacity of its computational resources increases too. Peer-to-peer networks are generally simpler, but they usually do not offer the same performance. A P2P network relies on computing power at the ends of a connection rather than from within the network itself.

fig [t]

P2P networking applications:

The P2P value proposition [5] “...for the user is to exchange excess computational, storage, and network resources for something else of value to the user, such as access to the resources, services, content, or participation in a social network.” The rapid gains in computer capacity and the wide adoption of broadband access have thus fueled their growth. As these trends are expected to continue, the following question arises: given the limited ability of search engines to index all of the web, can P2P search compete with or augment web search?

Some of the most common applications of peer to peer systems are data transfer and data storage. For example, in the data storage scenario, all the computers that are involved, store a portion of the data and there might be copies of the same data in different machines. So, if a computer fails the data that was on it can be restored relatively easily from other copies stored in the rest of the machines.
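The data storage scenario described above can be sketched as follows. This is a minimal in-memory Python model, not a real P2P protocol: the `Peer` class, the placement rule in `store`, and the default of two copies are assumptions made for illustration.

```python
from typing import Dict, List

class Peer:
    """A peer that keeps some blocks of data in memory."""
    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: Dict[str, bytes] = {}
        self.online = True

def store(peers: List[Peer], key: str, data: bytes, copies: int = 2) -> None:
    """Place `copies` replicas of a block on consecutive peers."""
    start = sum(key.encode()) % len(peers)  # simple deterministic placement
    for i in range(copies):
        peers[(start + i) % len(peers)].blocks[key] = data

def fetch(peers: List[Peer], key: str) -> bytes:
    """Return the block from any peer that is online and holds a copy."""
    for peer in peers:
        if peer.online and key in peer.blocks:
            return peer.blocks[key]
    raise KeyError(key)

peers = [Peer("a"), Peer("b"), Peer("c")]
store(peers, "report.pdf", b"contents")
holders = [p for p in peers if "report.pdf" in p.blocks]
holders[0].online = False           # one of the machines holding a copy fails
print(fetch(peers, "report.pdf"))   # the data is still served by the other copy
```

The design choice worth noting is that `fetch` does not care which peer answers; any surviving copy is enough, which is what makes recovery relatively easy.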

A few advantages of peer to peer distributed systems are:

a. Cost: Any ordinary device is sufficient; there is no need for an expensive server, which reduces the cost significantly.

b. Specialization: Each user in such a system manages their own computer, minimizing the need for a network administrator or an IT manager in general.

c. Network traffic: The traffic is reduced compared to a client-server model.

d. Installation: The network is easy to install, and so is the configuration of its components.

e. Shared resources: All the resources and contents are shared by all the peers, unlike the client-server architecture, where the server shares all the contents and resources.

f. Reliability: P2P is more reliable because the central dependency is eliminated. The failure of one peer does not affect the functioning of the other peers, whereas in a client-server network the whole network goes offline if the server goes down.

g. Administration: There is no need for a full-time system administrator. Each user is the administrator of their own machine and can control their shared resources.

There are a few disadvantages as well. A few worth mentioning are:

No organization: Data can be stored on any computer, so it is not organized in any central way. The whole system is decentralized and is therefore difficult to administer; no single person can determine the accessibility settings of the whole system.

Increased workload: Each machine effectively fulfils both the server and the client role, which increases the workload of each machine.

Security: Security, data backup and administration in general are down to each individual user. Because there is very little central protection in such a system, malware such as viruses, spyware and trojans can be transmitted easily.

2. CLOUD COMPUTING

Cloud computing, a sub-category of distributed systems, has become a large and very lucrative field, with an increasing number of users supporting this type of model. Owncloud, LinkedIn, Google Apps, YouTube and Microsoft Office 365 are a few of the most popular cloud-based applications. Cloud-based infrastructures are dominant at present and are here to stay, as shown by the statistics below:

fig [c] Size of the cloud computing services market from 2009 to 2022

“The statistic shows the size of the public cloud computing market worldwide from 2009 to 2022. This encompasses business process, platform, infrastructure, software, management, security, and advertising services delivered by public cloud services. In 2020, the public cloud services market is expected to reach around 266.4 billion U.S. dollars in size and by 2022 market revenue is forecast to exceed 350 billion U.S. dollars.” [1]

Cloud computing has become extremely popular in recent years, with well-known technology giants adopting this type of system in order to provide their services. Owncloud and Nextcloud, Microsoft with the Office 365 suite and Skype, Google with Google Drive, Apple with iCloud, Dropbox, and Adobe with Adobe Lightroom CC are a few examples. But what is cloud computing and what does it mean?

“The on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. The term is generally used to describe data centres available to many users over the Internet. Large clouds, predominant today, often have functions distributed over multiple locations from central servers. If the connection to the user is relatively close, it may be designated an edge server.” [2] (Cloud computing, 2020)

Cloud means that we can access and manipulate data stored online instead of on a local hard disk drive. In other words, a cloud is a computer that is accessible remotely, at any time, from anywhere.

Cloud computing architecture relies on three core components.

Client: The client is the user who uses a device in order to access the services and applications of a specific cloud.

Cloud Network: This is the interface between the client and the provider. Every cloud-based service is accessed through the network.

APIs: A cloud API defines the instructions through which users' requests are carried out.

2.1 Service models

Cloud computing is divided into three major categories, depending on the service it provides. This classification is necessary in order to distinguish the role that a particular cloud service fulfils and how that service accomplishes its role. These models are Infrastructure as a Service (IaaS), Software as a Service (SaaS) and Platform as a Service (PaaS).

IaaS (Infrastructure as a service): IaaS is about sharing hardware resources in order to run specific services. It is a self-service model for accessing, monitoring, and managing remote data center infrastructure, such as compute (virtualized or bare metal), storage, networking, and networking services like firewalls. Instead of having to purchase hardware outright, users can purchase IaaS based on consumption. The services include the storage, network bandwidth and processing required to set up a computing environment, and can potentially include applications or operating systems. IaaS provides infrastructure for on-demand services, and APIs are used for interaction between routers and hosts. In general, the user does not manage the hardware in the cloud infrastructure, but deploys the applications and controls the operating systems. The provider, on the other hand, is responsible for running and maintaining the underlying infrastructure. Amazon Web Services (AWS), Cisco Metapod, Microsoft Azure and Google Compute Engine (GCE) are examples of IaaS services.

Compared to SaaS and PaaS, IaaS users are managing applications, data, runtime, middleware, and operating systems.

Providers still manage virtualization, server, storage, and networking.

SaaS (Software as a service):

In this model, a third-party provider hosts applications and makes them available to clients over the internet. The provider deploys the applications in a hosting environment. The end user only needs an internet connection, because no local installation of the software is required. Instead, applications are used online, with files saved in the cloud and not locally. Microsoft Office 365, Google Docs and Dropbox are three well-known SaaS applications. Because of this web delivery model, SaaS eliminates the need to install and run applications on individual computers, and it makes it easy for enterprises to streamline their maintenance, because everything can be managed by the vendor.

PaaS (Platform as a service)

The cloud provider delivers hardware and software services, usually those needed to run applications, to the users. PaaS is used for application development, while providing cloud components to software. The gain with PaaS is that developers get a framework they can build upon to develop or customize software. It makes the development, testing, and deployment of applications quick, simple, and cost-effective.

With this technology, the software and hardware run on the provider's infrastructure, so enterprise operations or a third-party provider manage the operating systems, servers, storage, networking, and the PaaS software itself. Developers, however, manage the applications. As a result, clients do not need to install hardware or software of their own in order to run a new application.

A few examples worth mentioning are RedHat, Heroku and Microsoft Azure.
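The division of responsibilities across the three service models described above can be summarized as a small lookup table. This is a sketch in Python under stated assumptions: the layer names follow the IaaS description in this section, while placing "data" on the customer side for PaaS follows the common convention rather than anything stated in this essay, and the names `LAYERS` and `responsibilities` are hypothetical.

```python
# Layers of the stack, ordered from the top (closest to the user) to the bottom.
LAYERS = ["applications", "data", "runtime", "middleware", "operating system",
          "virtualization", "servers", "storage", "networking"]

# Number of layers, counted from the top of LAYERS, that the customer manages.
# The PaaS value of 2 (applications and data) is an assumption based on the
# common convention; the provider manages everything below that line.
CUSTOMER_MANAGED = {"IaaS": 5, "PaaS": 2, "SaaS": 0}

def responsibilities(model: str) -> dict:
    """Split the stack into customer-managed and provider-managed layers."""
    cut = CUSTOMER_MANAGED[model]
    return {"customer": LAYERS[:cut], "provider": LAYERS[cut:]}

for model in CUSTOMER_MANAGED:
    print(model, responsibilities(model))
```

Reading the table from IaaS to SaaS shows the line between customer and provider moving up the stack until, with SaaS, the vendor manages everything.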

2.2 Deployment Models

A cloud deployment model defines parameters of the cloud such as accessibility and storage size. There are four main cloud deployment models: private, public, hybrid and community.

Private: In this case, the cloud infrastructure is available for exclusive use, by a single organization. The cloud might be owned and managed by the organization itself or a third party. The server can be hosted internally or externally, depending on the needs of the organization.

Public: In public clouds, the infrastructure is available to the general public and is designed for open use. It might be owned and managed by a governmental, academic or other organization, and the server is located on the premises of the provider.

Hybrid: In the hybrid model, the infrastructure is a combination of two or more separate cloud infrastructures that remain unique but are bound together by technology that enables application and data portability. The constituent infrastructures might be public or private.

Community: In the community deployment model, the infrastructure is available to a specific community of users that have shared concerns. It may be owned or managed by one or more of the organizations in the community or by a third party, and the server may be located on or off the premises.

2.3 Limitations and advantages of Cloud Computing

Cloud computing has considerably improved how data is stored and how applications are developed in the computing world.

In this section, we present a few strong advantages as well as a few drawbacks.

Advantages

Cost:

Perhaps the most significant benefit of cloud computing is cost-effectiveness. It minimizes the cost of in-house server storage as well as the associated costs of maintaining such machines, such as air conditioning, ventilation, power and administration. Also, you do not need trained personnel to maintain the hardware. The purchase and management of equipment are handled by the cloud service provider.

Accessibility:

Cloud-based files can be accessed from any device, anywhere in the world.

Strategic edge:

Cloud services offer a competitive edge: they let you access data and applications at any time without spending time and money on installations.

Mobility:

With internet access, users working on the premises or at remote locations can easily access all the data and work remotely with no further cost or hardware.

Reliability:

With a managed service platform, cloud computing is significantly more reliable and consistent than in-house IT infrastructure.

Most of the cloud providers offer an agreement that guarantees the availability of the service 24/7.

Security: In case a server fails, hosted services and applications can be transferred to another available server, increasing the redundancy.

Quick Deployment: Cloud computing gives you the advantage of rapid deployment. It means, your entire system can be fully functional quickly.

Disadvantages

Planning and setting up a large cluster is highly nontrivial, and a cluster may require special software.

Technical Issues: The cloud is always prone to technical issues. Even the best cloud service providers face this type of trouble despite their high standards of maintenance.

fig [d]

As of January 2019, surveyed technical executives, managers, and practitioners of cloud technologies from around the world indicated that the biggest challenges of using cloud computing technology within their organizations were related to governance and a lack of resources or expertise. “Around a quarter of respondents saw governance as a significant challenge, while another 54 percent saw it as somewhat of a challenge. Other commonly cited challenges included security, compliance, and cloud management.”

Security: Although cloud service providers implement very strong security protocols to protect their infrastructures, storing valuable data with external service providers is always risky. Using cloud-based services means that you give a third-party cloud computing service provider access to all your company's sensitive information.

Limited Control: Since the cloud service is owned, managed and maintained by the provider alone, the customer has only limited control. The customer controls only the applications and services on the front end. Thus, administrative tasks such as firmware updates and shell access can only be performed by the provider.

Downtime: This is a very important drawback of cloud-based services. Although providers have handled redundancy very well, there are cases in which the services of the provider are temporarily unavailable. Additionally, an internet connection is always required to access cloud services, so a connection failure makes the applications or files stored in the cloud unavailable.

3. ALEX PROJECT

3.1 Design

ALEX is a cloud-based file-sharing system that uses storage and computational infrastructure. It is composed of several components: a file server, a database server, an application server and a backup server that is used for redundancy. These components interact in order to achieve the best possible functionality of the cloud, providing an efficient and pleasant experience to the end user. The end user can upload, download and delete files in the cloud storage. As in a typical cloud-based system, access is possible from anywhere, at any time. An internet connection is mandatory.

Application Server: This is the server where the cloud application runs. The client gains access to it and sees the “front end” of the app. The interface is simple and user friendly. In the first step, the user is asked to provide a username and a password in order to register or log in. Registration is necessary the very first time, so that the database server can store the credentials provided by the user. After successfully logging in, the user can interact with the interface of the application, where he can upload, download or delete files from the cloud using the dedicated buttons.

Database Server: This is the server where the users' credentials are stored. After a client registers, the username and password he provided are stored in the database, and every user is given a unique ID. Every time a client logs in, these credentials are checked by the server in order to complete the identification procedure and let the client gain access to the application environment.

File Server: The file server is the machine where all users' data is stored. It interacts with the application server in order to guarantee the availability of the files. After a successful login, the user interacts with the application server, which communicates with the file server in order to retrieve or add the files requested by the user. The files stored by the user are duplicated on a secondary hard disk drive for redundancy.
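The duplication of stored files onto a secondary drive, as described for the file server, could look roughly like this. The sketch is in Python for illustration only and is not the ALEX implementation; the function name `store_with_replica` and the directory names `disk1` and `disk2` (which stand in for the two drives) are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path

def store_with_replica(source: Path, primary_dir: Path, secondary_dir: Path) -> tuple:
    """Copy a file to the primary store and duplicate it on a secondary one."""
    primary_dir.mkdir(parents=True, exist_ok=True)
    secondary_dir.mkdir(parents=True, exist_ok=True)
    # copy2 also preserves file metadata such as modification times.
    primary_copy = Path(shutil.copy2(source, primary_dir / source.name))
    replica = Path(shutil.copy2(primary_copy, secondary_dir / source.name))
    return primary_copy, replica

# Demonstration in a temporary directory standing in for the two disks.
root = Path(tempfile.mkdtemp())
upload = root / "notes.txt"
upload.write_text("hello ALEX")
primary_copy, replica = store_with_replica(upload, root / "disk1", root / "disk2")
print(replica.read_text())
```

If the primary copy is lost, the replica on the second location still holds the same content, which is the redundancy the paragraph above refers to.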

Backup Server: The backup server is the computer that takes over the role of the application, database or file server in case one of them is down. It is intended to keep ALEX available 24/7/365.

3.2 System Analysis of ALEX [fig2]

a. Workflow

The ALEX cloud is a client-server distributed system. The client sends a connection request to the application server. Once the TCP/IP three-way handshake has completed, the server opens a socket for that particular client.

Once communication through sockets has been established, the client has to sign in in order to interact with the cloud and with anything stored in the server database.
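The connection step above can be illustrated with a tiny client and server on the local machine. This is a minimal sketch using Python's standard `socket` and `socketserver` modules, not the Java back end of ALEX; the handler name `EchoHandler`, the helper `ask`, and the `LIST files` request text are hypothetical.

```python
import socket
import socketserver
import threading

class EchoHandler(socketserver.StreamRequestHandler):
    """Handle one client: read a request line and send back a reply."""
    def handle(self) -> None:
        request = self.rfile.readline().strip()
        self.wfile.write(b"OK " + request + b"\n")

def ask(address, message: bytes) -> bytes:
    """Open a TCP connection (the handshake happens while connecting) and send one request."""
    with socket.create_connection(address, timeout=5) as conn:
        conn.sendall(message + b"\n")
        return conn.makefile("rb").readline().strip()

# Port 0 asks the operating system for any free port.
server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), EchoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
reply = ask(server.server_address, b"LIST files")
server.shutdown()
server.server_close()
print(reply)
```

The threading server gives each client its own handler, which mirrors the idea of the server opening a socket per client.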

The next step is the registration or login procedure. The client is asked whether he wants to register (first time only) or to log in. At this point, the database server comes into play. The application server communicates with the database server, and the credentials of the user (username and password) are stored in the SQL database by a simple PHP script. Every time the user wants to log in, the application server communicates with the database server in order to identify the specific user and grant access to his account. Passwords are hashed with the jQuery MD5 plugin, which can be found here: https://github.com/placemarker/jQuery-MD5
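The register and log in flow described above can be sketched as follows. Note that this sketch deliberately swaps in a different technique from the one ALEX uses: instead of MD5 it uses salted PBKDF2 from Python's standard `hashlib`, because MD5 is generally considered too weak for storing passwords. The in-memory `users` dictionary stands in for the SQL table, and the names `register`, `log_in` and `ITERATIONS` are hypothetical.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor; tune it for the actual deployment

users = {}  # username -> (salt, derived key); stands in for the SQL table

def register(username: str, password: str) -> bool:
    """Store a salted hash of the password; refuse duplicate usernames."""
    if username in users:
        return False
    salt = os.urandom(16)  # a fresh random salt per user
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    users[username] = (salt, key)
    return True

def log_in(username: str, password: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    if username not in users:
        return False
    salt, key = users[username]
    attempt = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(key, attempt)

registered = register("maria", "s3cret")
print(registered, log_in("maria", "s3cret"), log_in("maria", "wrong"))
```

The design point is that only the salt and the derived key are stored, never the password itself, so the check at login works by recomputing the hash rather than by decrypting anything.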

Then the user interacts with the second part of the front end, the actual content of the cloud. At this point he can see the “upload”, “download” and “view files” buttons. By pressing the “upload” button, he can upload his files, by pressing “download” button he can download his files and by pressing “view files” button he can see his uploaded files and delete any of them. After pressing the buttons mentioned above, the application server runs the back end of the application and communicates with the file server, the computer that stores the files. In this case, the application server sends or receives packets with data, to or from the file server, depending on the button pressed. Eventually the user can interact with his files, in the way he wishes.
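The operations behind the “upload”, “download” and “view files” buttons described above can be sketched as a small class. This is a Python illustration under stated assumptions, not the Java back end of ALEX: the class name `UserFiles`, the one-folder-per-user layout, and the temporary directory standing in for the file server are all hypothetical.

```python
import tempfile
from pathlib import Path

class UserFiles:
    """File operations for one user, backed by a directory on the file server."""
    def __init__(self, root: Path, username: str) -> None:
        self.folder = root / username
        self.folder.mkdir(parents=True, exist_ok=True)

    def _path(self, name: str) -> Path:
        # Keep only the final path component and reject empty or parent names,
        # so a request cannot point outside the user's own folder.
        safe = Path(name).name
        if safe in ("", ".."):
            raise ValueError("invalid file name")
        return self.folder / safe

    def upload(self, name: str, data: bytes) -> None:
        self._path(name).write_bytes(data)

    def download(self, name: str) -> bytes:
        return self._path(name).read_bytes()

    def view_files(self) -> list:
        return sorted(p.name for p in self.folder.iterdir())

    def delete(self, name: str) -> None:
        self._path(name).unlink()

files = UserFiles(Path(tempfile.mkdtemp()), "maria")
files.upload("cv.pdf", b"%PDF")
files.upload("notes.txt", b"hello")
files.delete("notes.txt")
print(files.view_files(), files.download("cv.pdf"))
```

In a deployment like the one described, the application server would call methods of this kind after the user has logged in, and the file server would hold the actual directories.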

b. Implementation / Programming languages

In this section, I describe the programming languages and tools used to implement the ALEX project.

Application Server

Front end: To implement the front-end GUI, I used CSS, HTML5 and JavaScript.

Back end: Because I wanted an open-source program, the back end was implemented in Java, using IntelliJ IDEA, since Java can be used everywhere: to create complete applications that can run on any operating system or be distributed across servers and clients in a network.

Java is well known as a multiplatform language that is neutral, almost completely portable, and compatible. It was therefore the best choice for an open-source model, given that completeness and neutrality are important for interoperability and portability:

“Interoperability characterizes the extent by which two implementations of systems or components from different manufacturers can co-exist and work together by merely relying on each other’s services as specified by a common standard.

Portability characterizes to what extent an application developed for a distributed system A can be executed, without modification, on a different distributed system B that implements the same interfaces as A.” [6]

To organize the data I used PHP and MySQL. MySQL is a reliable, scalable and fast database system that is easier to master than other database software such as Oracle Database or Microsoft SQL Server. On top of that, just like Java, MySQL runs on all major platforms (UNIX, Linux, Windows, etc.), so it is compatible with any device or OS used by the ALEX cloud, and no VM installation or plugin is required.

File Server

My operating system would certainly be plain Debian GNU/Linux, a free and open-source system, in order to protect the intellectual work of my users. Some of the protections that its licenses aim to provide are:

“

Not allowing use of their code in proprietary software. Since they are releasing their code for all to use, they don't want to see others steal it. In this case, use of the code is seen as a trust: you may use it, as long as you play by the same rules.

Protecting identity of authorship of the code. People take great pride in their work and do not want someone else to come along and remove their name from it or claim that they wrote it.

Distribution of source code. One of the problems with most proprietary software is that you can't fix bugs or customize it since the source code is not available. Also, the company may decide to stop supporting the hardware you use. Many free licenses force the distribution of the source code. This protects the user by allowing them to customize the software for their needs.

Forcing any work that includes part of their work (such works are called derived works in copyright discussions) to use the same license.”[7]

Its role is crucial, and I did a lot of research before choosing it to store and control access to all the data requested, through Java or PHP functions, from the back end of the application server. One of the most important requirements was that it had to be scalable.

B. Clifford Neuman [8] argues that the scalability of a system can be measured along at least three different dimensions:

“Size scalability: A system can be scalable with respect to its size, meaning that we can easily add more users and resources to the system without any noticeable loss of performance.

Geographical scalability: A geographically scalable system is one in which the users and resources may lie far apart, but the fact that communication delays may be significant is hardly noticed.

Administrative scalability: An administratively scalable system is one that can still be easily managed even if it spans many independent administrative organizations.”

fig 9: Leading operating systems in Hungary as of March 2020, by market share

Debian GNU/Linux can offer me that. My problem would of course be the investment needed for more computing power. The figure above supports this choice.

Conclusion

After analysing a variety of well-known distributed systems, and cloud computing more specifically, we can understand the importance of their role in the technology world. The cloud’s main appeal is to reduce the time to market of applications that need to scale dynamically. Increasingly, however, developers are drawn to the cloud by the abundance of advanced new services that can be incorporated into applications, from machine learning to internet of things (IoT) connectivity.

Software developers seem to approach the data storage and use of applications in a completely different way, compared to the “traditional” way of running programs locally, on their hard-drive.

Many cloud services are available to the public, and the ALEX project was inspired by some of them, trying to emulate the functionality and usability of the most well-known ones. Of course, that is a challenge and a difficult procedure. Although a lot of information is available on the web, building a cloud-based file-sharing system involves many difficulties, but the outcome seems satisfying.

ALEX cannot yet compete with the best, either in terms of performance or in terms of security, considering the time, budget and experience available. Still, it is a functional and stable application that provides the necessary performance, security and ease of use to the end user. Since it is completely open source, I hope that developers will notice my work and that someday enough applets and plugins will be fixed for it to be widely used.

Bibliography

[1] Adelstein F, Gupta S, Richard G, Schwiebert L (2005) Fundamentals of mobile and pervasive computing. McGraw-Hill, New York

[2] Sampaio, A., Costa Jr, R., C. Mendonça, N. and Hollanda Filho, R., 2013. Implementation and Empirical Assessment of a Web Application Cloud Deployment Tool. Services Transactions on Cloud Computing, 1(1), pp.40-52.

[3] Almeida, J., van Sinderen, M., Quartel, D. and Pires, L., 2005. Designing interaction

[4] Büning, H., 1995. Zohar Manna and Richard Waldinger. The logical basis for computer programming. Volume I. Deductive reasoning. Addison-Wesley series in computer science.

[5] J. Buford, H. Yu, and E. K. Lua. P2P Networking and Applications. Morgan Kaufmann 2008.

[6] Blair G, Stefani J-B (1998) Open distributed processing and multimedia. Addison-Wesley, Reading

[7] Debian.org. 2020. Debian -- What Does Free Mean?. [online] Available: <https://www.debian.org/intro/free> [Accessed 19 April 2020].

[8] Neuman B (1994) Scale in distributed systems. In: Casavant T, Singhal M (eds) Readings in distributed computing systems. IEEE Computer Society Press, Los Alamitos, pp 463–489

Figures

[a] Statista. 2020. Public Cloud Computing: Market Size 2009-2022 | Statista. [online] Available at: <https://www.statista.com/statistics/273818/global-revenue-generated-with-cloud-computing-since-2009/> [Accessed 16 April 2020].

[b] System Analysis of ALEX

[c] Size of the cloud computing services market from 2009 to 2022. Statista. [online] Available at: <https://www.statista.com/statistics/273818/global-revenue-generated-with-cloud-computing-since-2009/> [Accessed 16 April 2020].

[d] Challenges of using cloud computing worldwide as of 2019

[e] Statista: Leading operating systems in Hungary as of March 2020, by market share, by Statista Research Department, Mar 2, 2020

[t] Khalid H. Almitani, K., 2017. https://marz.kau.edu.sa/Files/320/Researches/70650_43625.pdf. Journal of King Abdulaziz University Engineering Sciences, 28(1), pp.67-90.